Part of creating a new insight into ‘Connected Environments’ (more on that term in future posts) is understanding the function, design and nature of devices. The enclosure of a device is all-important and, in the world of the Internet of Things, often overlooked.

Before delving into casing sensors, it's worth taking a step back to look at kits available online and how they can be adapted to a new form. As such, we have used the Pimoroni radio kit (it's a great kit) as a first example:

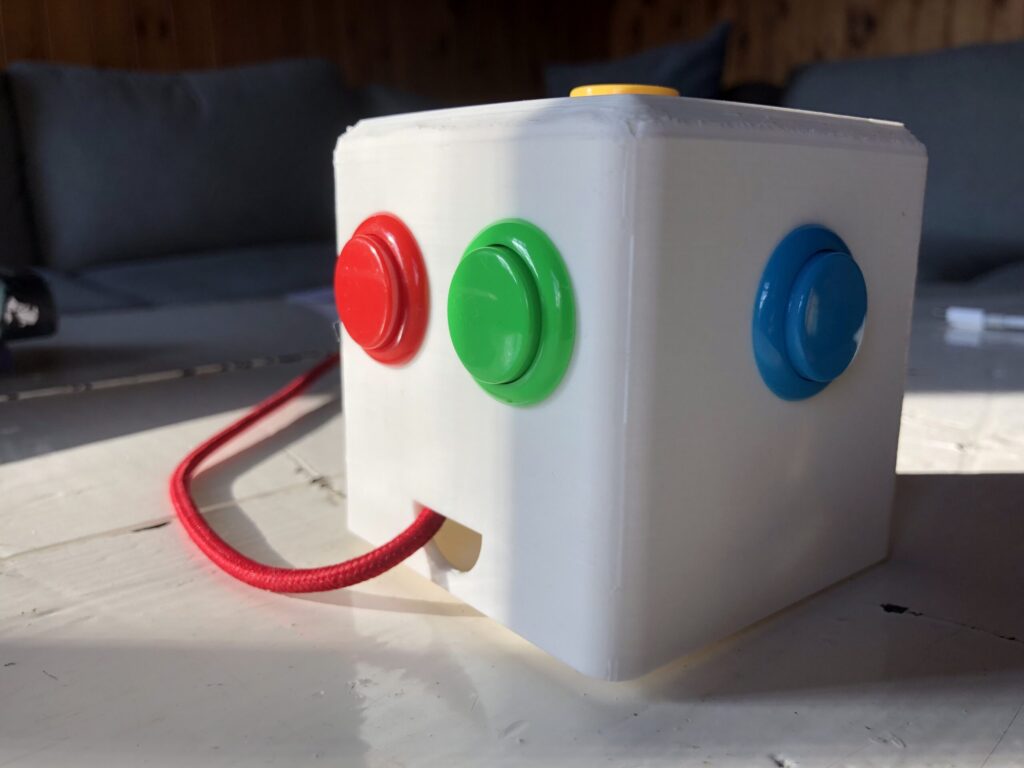

The redesigned case aims to create a small, easy-to-use radio with decent sound. It uses the PhatBeat header from Pimoroni, which can be purchased either alone or as part of a Pi Radio kit; full links are below via Thingiverse. It will of course work with any other audio output from a Raspberry Pi Zero.

The speaker has been replaced with a Surface Transducer, allowing a smaller case as well as making use of whatever surface the radio is placed upon to enhance the sound. The transducer is the small metal disc at the bottom of the unit; placing it on a wooden surface creates the deepest sound, a sound well beyond the size of the device.

The buttons are ‘mini arcade’ buttons, aimed at simplifying the operation of the radio: play/pause (Yellow), next station (Blue), volume up (Red) and volume down (Green).
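For anyone adapting the controls, the four-button layout can be sketched as a simple lookup from button colour to action. This is only an illustration and not the code running on the radio: the `RadioState` class, the `press` helper, the station names and the 0 to 100 volume range are all assumptions made for the example, and the wiring of the physical buttons to GPIO pins is left out.

```python
from dataclasses import dataclass


@dataclass
class RadioState:
    """Hypothetical model of the radio's state (not the PhatBeat API)."""
    stations: list
    station_index: int = 0
    volume: int = 50        # assumed range: 0 to 100
    playing: bool = True

    def play_pause(self):
        # Toggle between playing and paused.
        self.playing = not self.playing

    def next_station(self):
        # Step to the next station, wrapping back to the first.
        self.station_index = (self.station_index + 1) % len(self.stations)

    def volume_up(self, step=5):
        # Raise the volume, clamped at the assumed maximum.
        self.volume = min(100, self.volume + step)

    def volume_down(self, step=5):
        # Lower the volume, clamped at zero.
        self.volume = max(0, self.volume - step)


# Button colours as described in the post, mapped to actions.
BUTTON_ACTIONS = {
    "yellow": RadioState.play_pause,
    "blue": RadioState.next_station,
    "red": RadioState.volume_up,
    "green": RadioState.volume_down,
}


def press(radio, colour):
    """Apply the action for the button of the given colour."""
    BUTTON_ACTIONS[colour](radio)


radio = RadioState(stations=["Station A", "Station B"])  # placeholder names
press(radio, "blue")   # move to the next station
press(radio, "red")    # turn the volume up one step
```

Keeping the mapping in one dictionary means a button can be reassigned, or a fifth one added, without touching the rest of the logic.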

There is no power-off button in the current version, as it's built to be left powered for instant audio (although the feature is built into the PhatBeat, so it can easily be added).

Full details on how to make it, along with the 3D case to print, are now available on Thingiverse.

Do let us know if you make one…